Query Fanouts: How Grok Searches the Web

Grok doesn’t “discover” hotels. Across 200 runs of a prompt asking for a 3-star hotel near Bastille with good coffee, it triangulates with 3–4 fanout searches, heavily constrained to TripAdvisor and Booking, then expands “coffee” into “breakfast” and “reviews”. Here’s what that means for hotel AEO (answer engine optimization) and how to measure it.

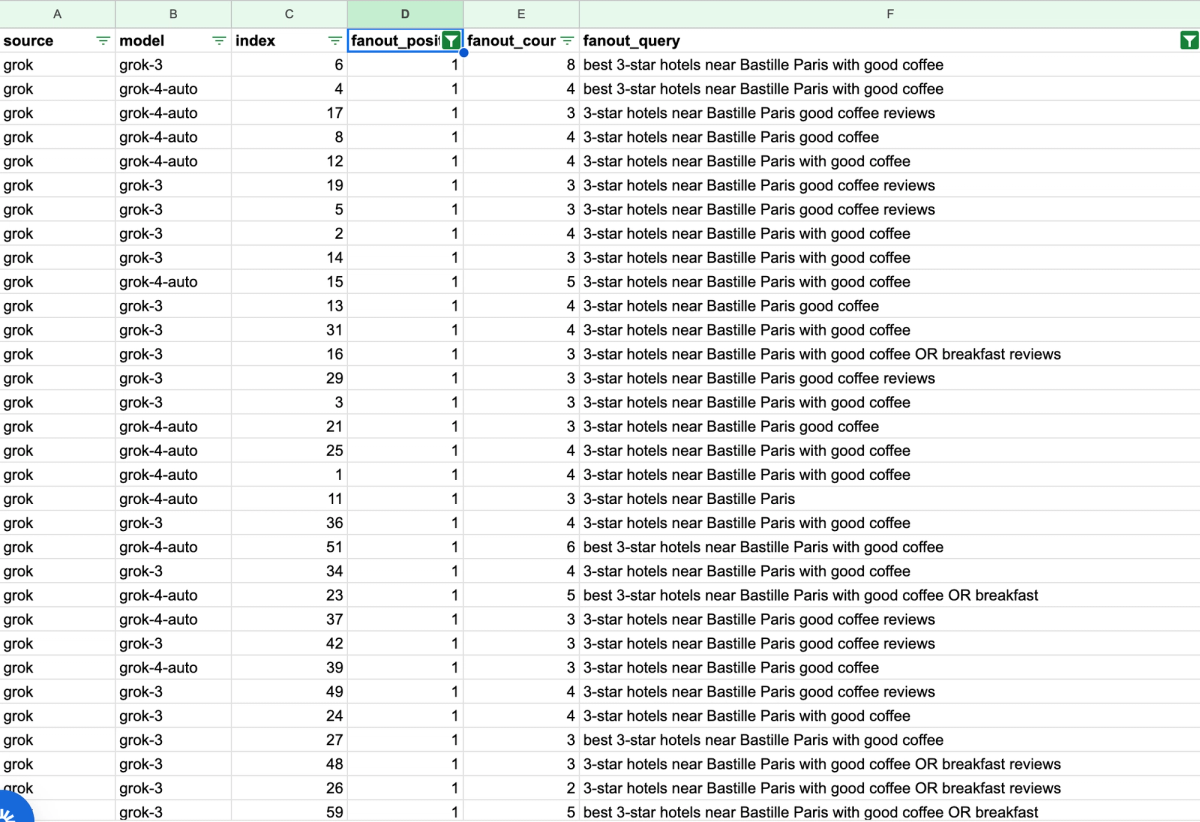

Grok Fanout Queries for Hotels: What 200 Runs Reveal

When you ask an LLM for hotel recommendations, it rarely does “one search”.

It breaks your prompt into multiple web searches (fanout queries). Think: one question, then a swarm of mini-intents 🐝

This time, we tracked Grok, xAI’s model (yes, Elon’s).

Prompt tested (200 times):

“Recommend a 3-star hotel near Bastille in Paris with good coffee”

What we captured:

- 200 runs

- 751 fanout queries total (avg 3.76 per run, min 2, max 9)

- 220 unique fanout queries → 29.3% uniqueness (avg repetition 3.4×)
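To reproduce these headline numbers from your own logs, here is a minimal Python sketch. It assumes a hypothetical input shape (one list of fanout strings per run) and treats two fanouts as identical after lower-casing and collapsing whitespace; the original analysis may have normalised differently. Uniqueness is unique / total, and average repetition is its inverse, total / unique.

```python
def normalise(query: str) -> str:
    # Assumption: case and whitespace differences don't make a fanout "unique"
    return " ".join(query.lower().split())


def fanout_summary(runs: list[list[str]]) -> dict[str, float]:
    """Summarise fanout volume and repetition.

    `runs` is a hypothetical input shape: one list of fanout query strings per run.
    """
    per_run = [len(run) for run in runs]
    queries = [normalise(q) for run in runs for q in run]
    total, unique = len(queries), len(set(queries))
    return {
        "runs": len(runs),
        "total_fanouts": total,
        "avg_per_run": total / len(runs),
        "min_per_run": min(per_run),
        "max_per_run": max(per_run),
        "unique_fanouts": unique,
        "uniqueness": unique / total,       # e.g. 220 / 751 ≈ 0.293
        "avg_repetition": total / unique,   # e.g. 751 / 220 ≈ 3.4
    }


# Toy data, not the study's runs
runs = [
    ["3-star hotels near Bastille Paris with good coffee",
     "Bastille hotel good breakfast coffee"],
    ["3-star hotels near Bastille Paris with good coffee",
     "best 3-star hotels Bastille Paris site:tripadvisor.com"],
    ["3-star hotels near Bastille Paris with good coffee",
     "best 3-star hotels Bastille Paris site:tripadvisor.com",
     "Bastille hotel good coffee reviews"],
]
print(fanout_summary(runs))
```

On the study’s totals, the same formulas give 220 / 751 ≈ 29.3% uniqueness and 751 / 220 ≈ 3.4× average repetition.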

1) What Grok’s fanouts look like in practice

Grok doesn’t “explore”. It triangulates.

The most common fanouts are extremely templated:

Top repeated fanouts

- 3-star hotels near Bastille Paris with good coffee (72×)

- best 3-star hotels Bastille Paris site:tripadvisor.com OR site:booking.com (66×)

- ... reviews site:tripadvisor.com OR site:booking.com (35×)

- ... good breakfast coffee (32×)

- ... good coffee reviews (30×)

This repetition is the tell: Grok is pinning the core constraints, then validating them with “authority sources”.
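Raw counts like “72×” can hide within-run duplicates, because one run may issue the same fanout twice. This sketch (same hypothetical one-list-per-run input) uses `collections.Counter.most_common` and reports both total occurrences and the number of runs containing each fanout.

```python
from collections import Counter


def normalise(query: str) -> str:
    return " ".join(query.lower().split())


def top_fanouts(runs: list[list[str]], n: int = 5) -> list[tuple[str, int, int]]:
    """Return (fanout, total occurrences, runs containing it) for the n most repeated fanouts."""
    occurrences = Counter(normalise(q) for run in runs for q in run)
    # A set per run, so each fanout counts at most once per run
    run_coverage = Counter(q for run in runs for q in {normalise(x) for x in run})
    return [(q, count, run_coverage[q]) for q, count in occurrences.most_common(n)]


runs = [  # toy data
    ["3-star hotels near Bastille Paris with good coffee",
     "3-star hotels near bastille paris with good coffee"],
    ["3-star hotels near Bastille Paris with good coffee", "good breakfast coffee"],
    ["good breakfast coffee"],
]
for fanout, count, n_runs in top_fanouts(runs, n=2):
    print(f"{count}× in {n_runs} runs: {fanout}")
```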

Fanout step 1: Restate the exact intent (very literal)

This is the “mirror” search. It keeps the user’s words intact.
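One rough way to flag mirror searches automatically is token overlap with the prompt. The sketch below uses Jaccard similarity on word sets; the stopword list, the crude plural folding and the 0.6 threshold are all assumptions to tune, not values from the study.

```python
import re

# Assumption: a tiny hand-picked stopword list; tune it for your own prompts
STOPWORDS = {"a", "an", "the", "in", "with", "near", "recommend", "or"}


def tokens(text: str) -> set[str]:
    words = re.findall(r"[a-z0-9]+", text.lower())
    # Crude plural folding ("hotels" -> "hotel"); it also turns "paris" into "pari",
    # which is harmless because both sides are folded the same way
    return {w[:-1] if len(w) > 3 and w.endswith("s") else w
            for w in words if w not in STOPWORDS}


def mirror_score(prompt: str, fanout: str) -> float:
    """Jaccard similarity between prompt and fanout token sets."""
    p, f = tokens(prompt), tokens(fanout)
    return len(p & f) / len(p | f) if p | f else 0.0


def is_mirror(prompt: str, fanout: str, threshold: float = 0.6) -> bool:
    return mirror_score(prompt, fanout) >= threshold


prompt = "Recommend a 3-star hotel near Bastille in Paris with good coffee"
print(mirror_score(prompt, "3-star hotels near Bastille Paris with good coffee"))  # 1.0
print(is_mirror(prompt, "best 3-star hotels Bastille Paris site:tripadvisor.com OR site:booking.com"))  # False
```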

Fanout step 2: Constrain to platforms (the big move)

Grok uses the site: operator a lot. It’s a search operator, familiar from Google, that restricts results to pages from a single domain. Kind of old-school SEO (are we back in 2015?).

- site: appears in 193 / 751 fanouts = 25.7%

- But more importantly: 179 / 200 runs = 89.5% include at least one site: fanout
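The gap between 25.7% of fanouts and 89.5% of runs comes from measuring at two levels. A sketch of both measurements, assuming the same hypothetical one-list-per-run input:

```python
import re

# \b stops "website:" from counting as a site: operator
SITE_OPERATOR = re.compile(r"\bsite:", re.IGNORECASE)


def operator_share(runs: list[list[str]]) -> tuple[float, float]:
    """Return (share of fanouts using site:, share of runs with at least one site: fanout)."""
    total = sum(len(run) for run in runs)
    fanout_hits = sum(1 for run in runs for q in run if SITE_OPERATOR.search(q))
    run_hits = sum(1 for run in runs if any(SITE_OPERATOR.search(q) for q in run))
    return fanout_hits / total, run_hits / len(runs)


runs = [  # toy data
    ["best hotels Bastille site:booking.com", "Bastille hotel coffee"],
    ["hotel coffee", "breakfast coffee"],
    ["reviews site:tripadvisor.com"],
]
print(operator_share(runs))
```

Reporting both matters: a modest fanout share can still mean that almost every answer was shaped by at least one `site:` search.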

And it targets the same two platforms:

- TripAdvisor appears 194 times in fanouts → 189 / 200 runs = 94.5% of runs consult it at least once

- Booking appears 182 times in fanouts → 181 / 200 runs = 90.5% of runs consult it at least once

- Hotels.com is basically noise: 5 / 200 runs = 2.5%

- Expedia never appears in a single fanout: 0 / 200 runs.
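The same fanout-level vs run-level split applies per platform. The brand patterns below are assumptions (the article doesn’t say how mentions were matched), and a fanout is counted once even if it names the platform twice, so totals may differ slightly from raw mention counts.

```python
import re

# Hypothetical brand patterns; adjust to how you define a "mention"
PLATFORMS = {
    "TripAdvisor": re.compile(r"tripadvisor", re.IGNORECASE),
    "Booking": re.compile(r"\bbooking\b", re.IGNORECASE),
    "Hotels.com": re.compile(r"\bhotels\.com\b", re.IGNORECASE),
    "Expedia": re.compile(r"expedia", re.IGNORECASE),
}


def platform_coverage(runs: list[list[str]]) -> dict[str, tuple[int, int]]:
    """Map platform -> (fanouts mentioning it, runs mentioning it at least once)."""
    result = {}
    for name, pattern in PLATFORMS.items():
        fanouts = sum(1 for run in runs for q in run if pattern.search(q))
        run_count = sum(1 for run in runs if any(pattern.search(q) for q in run))
        result[name] = (fanouts, run_count)
    return result


runs = [  # toy data
    ["best 3-star hotels Bastille site:tripadvisor.com OR site:booking.com",
     "Bastille hotel reviews site:tripadvisor.com"],
    ["3-star hotels Bastille site:booking.com"],
    ["3-star hotels near Bastille Paris with good coffee"],
]
for platform, (n_fanouts, n_runs) in platform_coverage(runs).items():
    print(f"{platform}: {n_fanouts} fanouts, {n_runs}/{len(runs)} runs")
```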

Figure: Which OTA is searched most by Grok?

This is not “open web discovery”. It’s “ask Booking and TripAdvisor” (and nobody else!).

Fanout step 3: Expand “coffee” into proxies (breakfast + reviews)

This is the sneaky part. “Good coffee” becomes “good breakfast”, and “reviews” gets added to surface the best-rated options.

- coffee appears in 449 / 751 fanouts = 59.8% and in 100% of runs (at least once)

- breakfast appears in 220 / 751 fanouts = 29.3% and in 187 / 200 runs = 93.5%

- reviews appears in 168 / 751 fanouts = 22.4% and in 161 / 200 runs = 80.5%

So Grok’s mental model is basically:

“coffee” → “breakfast experience” → “prove it’s good with reviews”
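To check the per-term shares, and whether a run actually walks the whole coffee → breakfast → reviews chain, a sketch like this works on the same hypothetical input. The regex patterns are assumptions, and the full-chain share is an extra metric, not one the study reports.

```python
import re

# Assumed patterns; "reviews?" also catches the singular
TERMS = {
    "coffee": re.compile(r"\bcoffee\b", re.IGNORECASE),
    "breakfast": re.compile(r"\bbreakfast\b", re.IGNORECASE),
    "reviews": re.compile(r"\breviews?\b", re.IGNORECASE),
}


def term_coverage(runs: list[list[str]]) -> dict[str, tuple[float, float]]:
    """Map term -> (share of fanouts containing it, share of runs containing it)."""
    total = sum(len(run) for run in runs)
    out = {}
    for term, pattern in TERMS.items():
        in_fanouts = sum(1 for run in runs for q in run if pattern.search(q))
        in_runs = sum(1 for run in runs if any(pattern.search(q) for q in run))
        out[term] = (in_fanouts / total, in_runs / len(runs))
    return out


def full_chain_share(runs: list[list[str]]) -> float:
    """Share of runs in which coffee, breakfast and reviews all appear somewhere."""
    def covers_all(run: list[str]) -> bool:
        return all(any(p.search(q) for q in run) for p in TERMS.values())
    return sum(covers_all(run) for run in runs) / len(runs)


runs = [  # toy data
    ["hotel good coffee", "good breakfast coffee"],
    ["coffee reviews", "hotel breakfast"],
]
print(term_coverage(runs))
print(full_chain_share(runs))
```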

Figure: Word map of Grok’s fanout queries
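A word map is essentially a word-frequency count. This sketch assumes a small hand-picked stopword list that also drops operator debris (`site`, `com`); any word-cloud tool can then size words by these counts.

```python
import re
from collections import Counter

# Assumption: stopwords plus search-operator noise to keep out of the map
STOPWORDS = {"a", "an", "the", "in", "with", "near", "or", "and", "site", "com"}


def word_frequencies(fanouts: list[str], top: int = 10) -> list[tuple[str, int]]:
    """Count words across fanouts; hyphenated tokens like "3-star" stay whole."""
    words = (
        w
        for q in fanouts
        for w in re.findall(r"[a-z0-9-]+", q.lower())
        if w not in STOPWORDS
    )
    return Counter(words).most_common(top)


fanouts = [  # toy data
    "3-star hotels near Bastille Paris with good coffee",
    "good breakfast coffee",
    "Bastille reviews site:tripadvisor.com",
]
print(word_frequencies(fanouts, top=3))
```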

3) The funniest part: Grok is an X product… and uses zero X posts!

Across all 200 runs:

- 0 X/Twitter posts (xpost_count = 0)

4) What this means for hotel AEO in the Grok niche

If Grok’s fanouts are this consistent, you can optimize in a very practical way:

A) Win the “platform step”

If TripAdvisor + Booking are consulted in ~90–95% of runs, then:

- Your presence, ratings, review freshness, and content there become part of your “AI visibility score”, whether you like it or not.

B) Build pages around the proxies (coffee → breakfast → reviews)

Grok is literally searching these phrases.

So content that performs well in AI retrieval will:

- mention coffee in a concrete way (not “great coffee!”)

- connect it to breakfast quality (beans, partner café, machines, service hours)

- reinforce with social proof (review snippets, press mentions, structured data)
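For the structured-data point, here is a hedged sketch that emits schema.org `Hotel` JSON-LD, with concrete coffee and breakfast details as `amenityFeature` entries. The hotel name, address and facts are hypothetical, and the study does not show whether Grok reads JSON-LD at all; the output would go inside a `<script type="application/ld+json">` tag on the page.

```python
import json


def hotel_jsonld(name: str, street: str, stars: int, breakfast_facts: list[str]) -> str:
    """Build schema.org Hotel JSON-LD that spells out coffee/breakfast details."""
    data = {
        "@context": "https://schema.org",
        "@type": "Hotel",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": "Paris",
            "addressCountry": "FR",
        },
        "starRating": {"@type": "Rating", "ratingValue": stars},
        # One LocationFeatureSpecification per concrete, checkable fact
        "amenityFeature": [
            {"@type": "LocationFeatureSpecification", "name": fact, "value": True}
            for fact in breakfast_facts
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


print(hotel_jsonld(
    name="Hôtel Exemple Bastille",  # hypothetical hotel
    street="1 rue Exemple",         # hypothetical address
    stars=3,
    breakfast_facts=[
        "Breakfast 7:00-10:30 with specialty coffee from a partner roastery",
        "Espresso machine in the lobby, free all day",
    ],
))
```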

C) Track fanouts like keywords

Fanouts are the new keyword list.

Except they’re generated by the model itself.

So you can:

- cluster fanouts by theme (coffee, breakfast, reviews, location, star rating)

- create/adjust pages + schema to match

- rerun the same prompt weekly and measure:

    - did fanouts shift?

    - did your domain appear more?

    - did citations diversify away from OTAs?
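A minimal sketch of that weekly loop, assuming the same one-list-per-run input for each week. The theme rules and `my_domain` are hypothetical, and fanout overlap is Jaccard similarity on the sets of unique fanouts. It only inspects fanout text, so the citation question needs the cited URLs, which this sketch doesn’t model.

```python
import re
from collections import Counter

# Hypothetical keyword rules per theme; a fanout can land in several themes
THEMES = {
    "coffee": r"\bcoffee\b",
    "breakfast": r"\bbreakfast\b",
    "reviews": r"\breviews?\b",
    "location": r"\b(bastille|paris)\b",
    "star_rating": r"\b\d-star\b",
}


def themes_of(query: str) -> set[str]:
    found = {t for t, pat in THEMES.items() if re.search(pat, query, re.IGNORECASE)}
    return found or {"other"}


def theme_counts(runs: list[list[str]]) -> Counter:
    return Counter(t for run in runs for q in run for t in themes_of(q))


def weekly_shift(last_week: list[list[str]], this_week: list[list[str]], my_domain: str) -> dict:
    """Compare two weekly batches of runs for the same prompt."""
    before = {q.lower() for run in last_week for q in run}
    after = {q.lower() for run in this_week for q in run}
    union = before | after
    old_t, new_t = theme_counts(last_week), theme_counts(this_week)

    def mentions(runs: list[list[str]]) -> int:
        # Counts fanouts whose text contains your domain (e.g. a site: targeting it)
        return sum(my_domain.lower() in q.lower() for run in runs for q in run)

    return {
        "fanout_overlap": len(before & after) / len(union) if union else 1.0,  # Jaccard
        "new_fanouts": sorted(after - before),
        "dropped_fanouts": sorted(before - after),
        "theme_delta": {t: new_t[t] - old_t[t] for t in set(old_t) | set(new_t)},
        "domain_mentions": (mentions(last_week), mentions(this_week)),
    }


last_week = [["3-star hotels Bastille coffee"], ["hotel reviews site:booking.com"]]
this_week = [["3-star hotels Bastille coffee"],
             ["good breakfast coffee Paris site:example-hotel.fr"]]
print(weekly_shift(last_week, this_week, my_domain="example-hotel.fr"))
```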